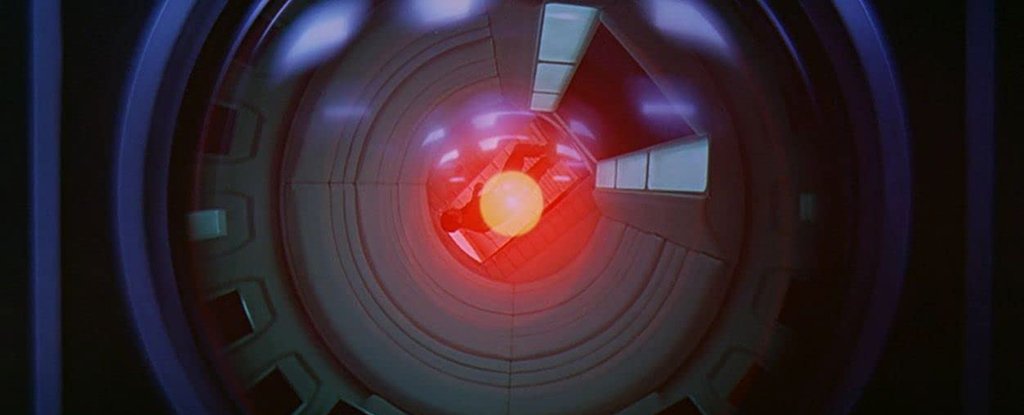

AlphaZero: The Birth of Skynet


Capital Thinking · Issue #769

The idea of artificial intelligence overthrowing humankind has been talked about for many decades, and scientists have just delivered their verdict on whether we’d be able to control a high-level computer super-intelligence.

The answer?

Almost definitely not.

Calculations Show It’ll Be Impossible to Control a Super-Intelligent AI

The catch is that controlling a super-intelligence far beyond human comprehension would require a simulation of that super-intelligence which we can analyse. But if we’re unable to comprehend it, it’s impossible to create such a simulation.

Rules such as ‘cause no harm to humans’ can’t be set if we don’t understand the kind of scenarios that an AI is going to come up with, suggest the authors of the new paper. Once a computer system is working on a level above the scope of our programmers, we can no longer set limits.

“A super-intelligence poses a fundamentally different problem than those typically studied under the banner of ‘robot ethics’,” write the researchers.

“This is because a superintelligence is multi-faceted, and therefore potentially capable of mobilising a diversity of resources in order to achieve objectives that are potentially incomprehensible to humans, let alone controllable.”

Part of the team’s reasoning comes from the halting problem put forward by Alan Turing in 1936. The problem centres on knowing whether or not a computer program will reach a conclusion and answer (so it halts), or simply loop forever trying to find one.
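The halting-problem argument the researchers lean on can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not code from the paper: "programs" are modelled as zero-argument Python callables, and `make_contrarian`, `halts_via_harm_checker`, `harm_humans` and `HARM_LOG` are hypothetical names. The first function is Turing's diagonal construction: whatever a candidate decider predicts about the program it builds, that program does the opposite, so no decider can be right about every program. The second shows the kind of reduction such containment arguments rely on: a checker that could always tell whether a program will cause harm could also tell whether an arbitrary program halts, which Turing showed is impossible.

```python
from typing import Callable, List

# Assumption for illustration: a "program" is a zero-argument Python callable.
Program = Callable[[], None]
# Hypothetical decider that claims to predict whether a program halts.
HaltDecider = Callable[[Program], bool]
# Hypothetical containment check that claims to predict whether a program harms humans.
HarmDecider = Callable[[Program], bool]

# Records each time the stand-in "harmful" action is reached.
HARM_LOG: List[str] = []


def harm_humans() -> None:
    """Hypothetical stand-in for a forbidden action; it only logs that it ran."""
    HARM_LOG.append("harm")


def make_contrarian(halts: HaltDecider) -> Program:
    """Turing-style diagonal construction: return a program that does the
    opposite of whatever `halts` predicts about that very program."""
    def contrarian() -> None:
        if halts(contrarian):
            while True:  # decider said "halts", so loop forever
                pass
        # decider said "loops forever", so return (halt) immediately
    return contrarian


def halts_via_harm_checker(is_harmful: HarmDecider, program: Program) -> bool:
    """Reduction sketch: wrap `program` so harm happens only if it halts.
    A perfect `is_harmful` would therefore also answer the halting question."""
    def run_then_harm() -> None:
        program()      # never returns if `program` loops forever
        harm_humans()  # reached only when `program` halts
    return is_harmful(run_then_harm)
```

For instance, `make_contrarian(lambda p: False)()` returns immediately, contradicting a decider that predicted it would loop; a decider that answers `True` is contradicted too, but showing that would mean running a program that never stops. Likewise, a harm checker that simply runs the wrapped program and watches for `harm_humans` works for programs that halt but never returns for one that loops, which echoes the researchers' point that control would require a simulation we can analyse.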

Photo credit: Possessed Photography on Unsplash

Losing Your Place in the Food Chain

Of all the subjects we cover in this publication, machine learning (or, as we call it, artificial intuition) is the most important, with the greatest implications for the human race.

We have written for years that machine learning is accelerating at a pace beyond our comprehension.

If AlphaZero can achieve this mastery in such a short period of time, where will artificial intuition be in one year?

Two years? Five years?

On its own, in just a few hours of experimental self-play, AlphaZero blew past a level of Chess mastery that took humans over 1,500 years to attain.

Many years ago, we wrote a paper in college predicting that a computer chip containing all of human knowledge and wisdom would be implanted in our brains at birth, so that humanity wouldn’t keep repeating the mistakes made over thousands of years.

Depending upon which vantage point you take, artificial intuition is the most important development in the history of the human race or the most dangerous.

In the WILTWs of December 14, 2017 and December 21, 2017, we began to explore the implications of DeepMind’s AlphaZero algorithm achieving a superhuman level of play in Chess within a few hours of “tabula rasa” self-play.

In early December, DeepMind reported that AlphaZero outperformed Stockfish, the reigning computer Chess champion, after just four hours of training, or 300k “training steps.”

In the Japanese game Shogi, AlphaZero outperformed the reigning computer champion, Elmo, after less than two hours, or 110k steps.

International Chess Grandmaster Viswanathan Anand underscores that AlphaZero’s ability to figure “everything out from scratch…is scary and promising…”

One can only wonder what level of learning AlphaZero could reach if it kept playing for days or weeks.

We recently read Life 3.0: Being Human in an Age of Artificial Intelligence by MIT professor Max Tegmark and Homo Deus by historian Yuval Noah Harari.

Both books explore the ominous implications of the emerging AI era. The world is at a major inflection point that will determine our AI future and perhaps the survival of the human species.

What are the key implications?

- Machine learning is improving at an accelerating, mind-boggling rate. In the last month, China’s Alibaba has developed an AI that beat humans on a Stanford University reading comprehension test. Japanese researchers also created a neural network that can read and interpret complex human thoughts, surpassing prior achievements.

- Governments realize that whoever becomes the leader in AI could rule the world, and they are investing huge sums to take the lead. No doubt Russia, Israel and Iran, to name a few, are working intensely on it. Earlier this month, Beijing announced plans to build a $2 billion AI research park. The Chinese government is also building a $10 billion quantum computer center, and has opened a national lab, operated by Baidu, dedicated to making the nation more competitive in machine learning. Additionally, Alibaba is doubling its R&D spending to $15 billion to focus on AI and quantum computing. Given all this, DeepMind may well not be the leader in deep learning systems, but simply the only one that has chosen to publish its results.

- Superintelligent AI will increasingly be able to tackle complex problems, supercharged by quantum computing systems. Powerful AI systems may be able to find solutions to global grand challenges that humans have been unable to solve, whether climate change, poverty, or lack of clean water. AlphaZero is a general-purpose AI agent that, in principle, can be transplanted into other domains. Demis Hassabis, DeepMind’s co-founder and CEO, believes that AlphaZero-related algorithms can help drive innovation in areas such as drug discovery and the design of cheaper, more durable materials.

- But smart AI systems are a double-edged sword, because they could also be harnessed by dark forces. Terrorists or predatory governments may ask AI systems for the best way to hack the U.S. grid, or to achieve an EMP attack without detonating an atom bomb in the atmosphere. Given the ability of algorithms to strategize brilliantly in Chess and Go, could it be easier for an AI system to hack a nation’s nuclear arsenal? It is a timely question, given last weekend’s false missile alert in Hawaii.

- As change accelerates under machine learning, it may overload human nervous systems. In Homo Deus, Yuval Harari warns that “dataism” may prevail. Dataists believe that humans can no longer cope with the immense flows of data. Hence, humans cannot distill data into information, let alone into knowledge or wisdom.

Dataism is a potential new “cultural religion” that could engulf society as AI becomes pervasive.

It combines the premise that organisms are biochemical algorithms with computer scientists’ ability to engineer increasingly sophisticated electronic algorithms.

In essence, dataism argues that the same mathematical laws apply to both biochemical and electronic algorithms, thereby collapsing the barrier between animals [or humans] and machines.

“Beethoven’s Fifth Symphony, a stock-exchange bubble and the flu virus are just three patterns of data flow that can be analyzed using the same basic concepts and tools,” notes Harari.

In dataism, human experiences are not sacred, and Homo sapiens is neither the peak of creation nor a precursor to a future “Homo deus,” the being humans might become as they seek immortality, happiness and divinity by upgrading themselves into the equivalent of gods via technology.

Instead, humans are simply tools for creating the Internet-of-All-Things that may ultimately spread beyond Earth to occupy the entire universe.

Initially, notes Harari, dataism may accelerate the humanist pursuit of health, happiness and power. However, “once authority shifts from humans to algorithms, humanist projects may become irrelevant.”

“We are striving to engineer the Internet-of-All-Things in the hope that it will make us healthy, happy and powerful. Yet once the Internet-of-All-Things is up and running, humans might be reduced from engineers to chips, then to data, and eventually we might dissolve within the torrent of data like a clump of earth within a gushing river. Dataism thereby threatens to do to Homo sapiens what Homo sapiens has done to all other animals.”